This is a strange timeline. Just a little over a year ago, I wrote about shifting some personal services onto Oracle Cloud, which offered the most generous free tier available. I did this because self-hosting my own infrastructure seemed silly.

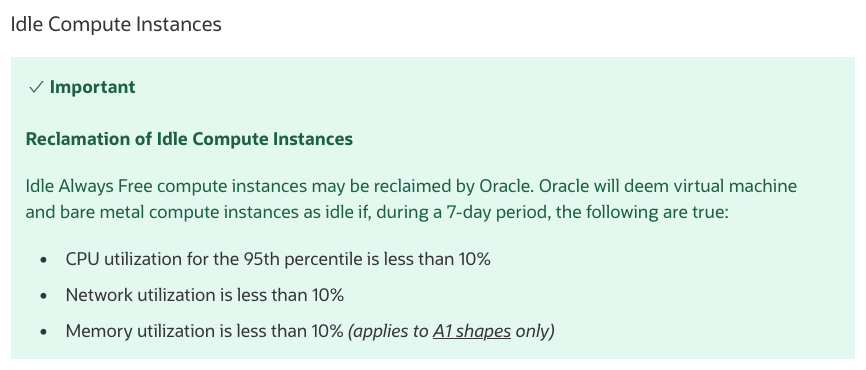

A few days ago, while filtering out spam, I noticed an email from Oracle in which they deemed my two VM instances idle because, well, I’ll just show you.

Eesh. Well, Oracle is often referred to as the IT world’s Darth Vader, not least after suing Google over Android, so this isn’t surprising. Fortunately, this portrayal lets me use this meme.

My recourse? Adding a credit card and switching my account from a truly free tier to one where Oracle can bill me if/when I exceed the free usage limits. Ok, that’s fine, but I don’t particularly like handing my credit card information to companies when I’m just trying to use their free service. And as you, the reader, might appreciate, avoiding Oracle’s definition of idling requires something to chew up more CPU or network bandwidth than this small WordPress blog and the public-facing personal services I run. WordPress is certainly a large and complicated CMS these days, but the traffic needed to meet either the CPU or the network utilization threshold vastly exceeds anything I’ve ever seen. Shocking, right?
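For a rough sense of why a small blog can’t clear the bar, here’s a minimal Python sketch of a percentile-based idle check. It assumes a rule shaped like the one Oracle describes for Always Free instances: an instance counts as idle only if each measured utilization stays below a fixed percentage at the 95th percentile over a week. The `percentile_95` and `looks_idle` names are hypothetical, and the 20% default is a placeholder rather than a quoted figure; Oracle’s notice has the real numbers.

```python
import math


def percentile_95(samples: list[float]) -> float:
    """Nearest-rank 95th percentile of utilization samples, in percent."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.95 * len(ordered))  # 1-based nearest rank
    return ordered[rank - 1]


def looks_idle(cpu: list[float], net: list[float], threshold: float = 20.0) -> bool:
    """Hypothetical idle rule: idle only if BOTH metrics stay under the threshold.

    The 20% default is a placeholder assumption, not Oracle's published figure.
    Clearing either metric is enough to avoid being flagged.
    """
    return percentile_95(cpu) < threshold and percentile_95(net) < threshold


# A week of hourly samples (168 hours): mostly ~2% CPU with eight busy hours.
cpu = [2.0] * 160 + [60.0] * 8
net = [1.0] * 168

print(percentile_95(cpu))    # 2.0: the busy hours fall in the top 5% and are ignored
print(looks_idle(cpu, net))  # True
```

The takeaway is that a percentile ignores short bursts: eight busy hours out of 168 land in the top 5% and never move the needle. Under this kind of rule, even a traffic spike from a popular post wouldn’t save an instance; only sustained load would.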

On one hand, this policy is understandable. Reclaiming instances this way lets Oracle reallocate the capacity behind rounding-error workloads like mine across whatever data center they live in, and there are good reasons to do so. And on occasion, Oracle has moved my instances onto different hardware. Downtime is acceptable at this price, so this never caused any concern; that’s just how hosting works sometimes. It’s also one reason I didn’t start with self-hosting: hardware keeps breaking despite our best efforts, and since my self-hosting needs are so light, it didn’t make sense to use my own hardware.

The wrinkle was that not all instances were equally easy to obtain. Oracle’s Ampere A1 instances (VM.Standard.A1.Flex) were substantially faster than the AMD instances (VM.Standard.E2.1.Micro), so much faster that I could perceive the difference just serving this website! Being superior, the A1 instances are also hard to obtain. Indeed, I couldn’t get one when I first signed up in November 2021 and still didn’t have one when I blogged about it. Only in March 2022 did I luck into one while playing around in Oracle’s console. After noticing the speed difference just navigating the system, I migrated most of my ARM-compatible services there. My speed indicators jumped immediately, boosting my PageRank and bringing the riches of readership that I now enjoy.

Even on the much slower AMD instances, where the same work eats up a larger share of the CPU, I couldn’t hit Oracle’s thresholds. I’m using a well-liked and likely very efficient software stack to serve traffic, and I filter that traffic through Cloudflare. Even without Cloudflare in front, the traffic wouldn’t push even a poorly thought-out, inefficient software stack past Oracle’s thresholds. And to be frank, tinkering on the AMD instances wasn’t fun. They were substantially slower than anything I’ve used in recent memory, including an HP ProLiant MicroServer N40L.

The answer, after some noodling, was simply to move those services back onto my own hardware. Yes, extreme self-hosting. I already host some personal stuff at home on a Celeron G3900 server living inside a Fractal Design Node 804 case. Here, too, the CPU load is low, since the machine mostly tends the spinning rust the case houses. The catch is that my ISP most likely filters or blocks inbound traffic on ports 80 and 443 to discourage hosting, and since these public-facing services are all web services, those are exactly the ports I need.

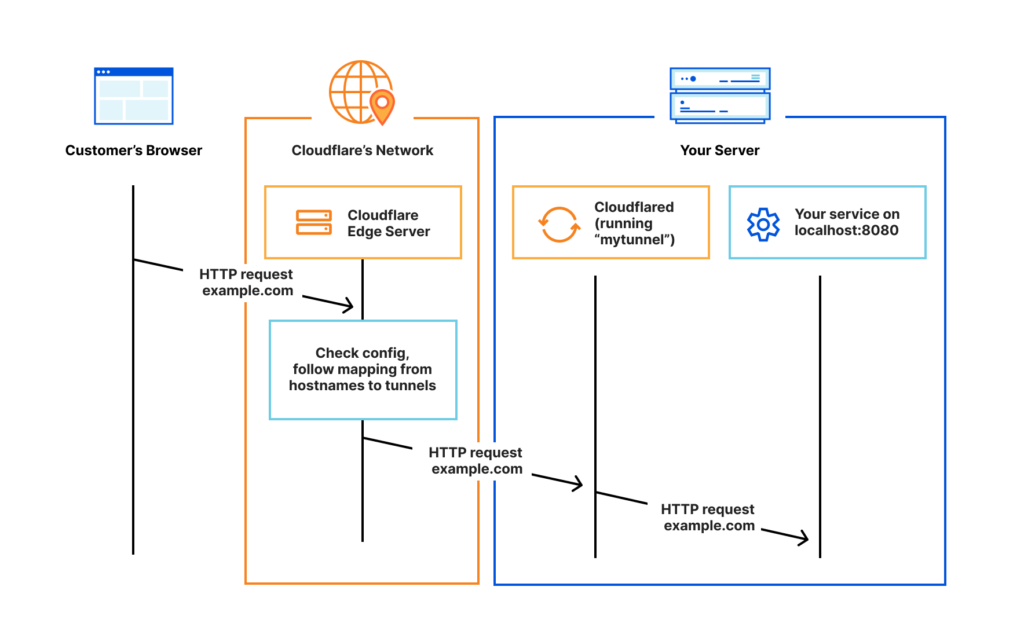

Enter Cloudflare Tunnel, which has become all the rage in some subreddits. No publicly routable IP address is necessary; instead, I run a daemon (cloudflared) on the system that opens an outbound connection to Cloudflare’s infrastructure. Here’s a picture from Cloudflare themselves illustrating this configuration.

Yes, that means I’m (i) running someone else’s code on my system, with system-level access, to (ii) explicitly insert a middleman between myself and my traffic. But this isn’t different from trusting any other software on my server (e.g., Docker in general, my ad-filtering DNS server), and for my public-facing infrastructure, I’ve already been using Cloudflare to filter out malicious traffic. So Cloudflare’s tunnel only slightly changes the security profile while letting me self-host these small and, apparently, unwanted workloads.

So over the course of a few days, I looked into what’s necessary to run Cloudflare’s daemon on my hardware. I added their Docker image to my compose file, fiddled with a few settings, and fired things up. Everything came right back up just like before. Caddy’s configuration didn’t change at all during the migration. In fact, none of the containers needed changes beyond moving them from the default network to a dedicated network shared with the Cloudflare daemon. And by using Docker, I have at least some assurance that should someone find a way into the system (e.g., through a supply chain attack on Cloudflare), their access is constrained by the Docker engine. Meanwhile, I still run other Docker containers separately to host the services that aren’t public-facing.

So instead of relying on a cloud provider to host the infrastructure, I’ve brought it in-house. Decentralization seems to be the theme for 2023, and self-hosting is the way forward.