The day finally arrived. Years after the demise of Electric Objects as a company, my E01 died. This is my remembrance of a long-gone hardware startup’s quirky device, which grew into a treasured window on life.

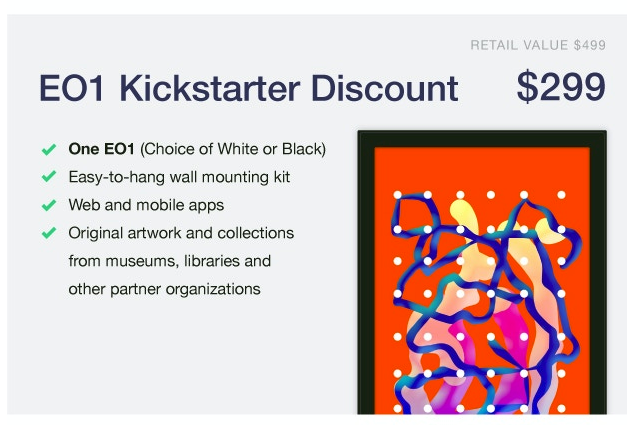

I first heard about the E01 from Kickstarter, back when crowdfunding was relatively novel and there were more interesting hardware projects. The idea was good: using a large screen to display digital art. As their marketing for the project said, the E01 was “a computer designed to bring the beauty of the Internet into your home.” Indeed, Electric Objects wanted to create a new way to experience art in one’s home.

The sleek and clean look of the hardware embodied what I’d wanted for a while. And this was far simpler than what I had dreamed of. I’d often imagined making something similar with a Raspberry Pi. But the software didn’t exist yet, so I would have needed to build it. And there wasn’t a way I could make the hardware look this good. Here, someone had done all the necessary work and created a device with a different aspect ratio, which made for an interesting presentation of images. So, I happily pledged for my device at the Kickstarter price.

Electric Objects dutifully delivered on the E01. Looking back, this was a small miracle in and of itself. And now, I had a nice app I could use to easily display a library of digital art on a sleek display in my study. And the system included the ability to display my own photography. I was a very happy camper. And things seemed positive overall. The company was successful enough to create a successor, the E02. I didn’t upgrade because the E01 served my purposes perfectly. But the creation of a successor device suggested a viable business existed, at least to this outside observer.

But suddenly, in 2017, Electric Objects shut its hardware business down and sold the app to Giphy. The company would become a cautionary tale about the risks associated with hardware startups. And eventually, Jack Levine, the founder, reminisced about why Electric Objects failed. But the death of the company didn’t kill the device. The E01 continued to serve its purpose. But how long could this device continue to work?

The last day of service, according to Reddit, was June 28, 2023.

It was remarkable that service lasted this long, and that the hurdles along the way didn’t kill it sooner.

The first hurdle was the lack of app updates after the company’s death. iOS application developers need to keep up with Apple’s API changes or users won’t have access to the app in later iOS versions. Fortunately, throughout the E01’s life, Apple allowed the old iPad to continue receiving photo updates. But the app itself ran afoul of Apple’s guidelines. In short order, I needed to keep an old iPad around running iOS 12 to have a working copy of the Electric Objects app. Whatever changed in iOS 13 prevented the app from running there. This was fine, until my iPad decided to free up space and remove the E01 app. Since the company didn’t exist anymore, the App Store took away downloads of the app. I eventually found a way to reload the app onto the old iPad, and usage was restored. Later, I discovered the website itself included nearly all the app’s functionality.

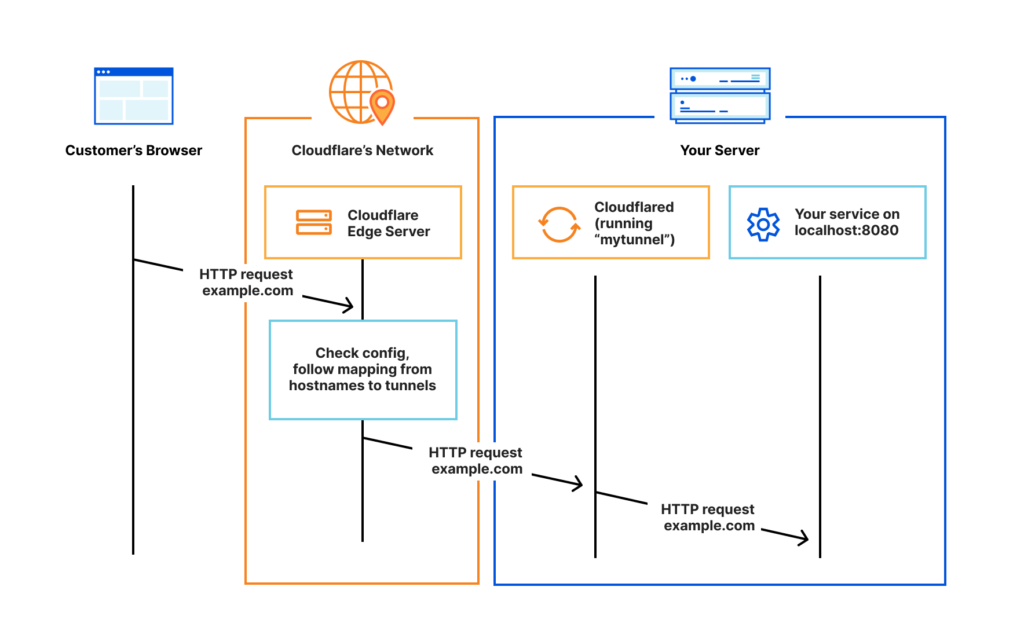

Other hurdles were outside of my realm of control. There’d be intermittent issues with the server infrastructure, which would cause the screen to freeze or fail to load new content. Indeed, before its final days, my E01 had experienced glitches where the images didn’t rotate. But inevitably, some helpful soul would fix things and restore service. I like to imagine that someone had a computer hiding under their desk hosting all of the content. But we may never know.

Aside from software issues, the hardware itself struggled against time. Last year, my screen started flickering strangely in the corner, and then refused to boot. Several hours of research later, I placed an Amazon order for a replacement AC adapter. Someone had explained that the AC adapter originally included with the device could fail and cause a boot loop. And this gave the E01 another span of faithful service.

I wasn’t the only one still loving the device. Through the years, a small subreddit of other E01 and E02 users coalesced and provided solutions to the problems I encountered. Although the community was small, we persisted.

With its recent final death, I have come to realize how important the E01 had become. I initially displayed images curated by Electric Objects. They included interesting digital art, some with subtle movement, that used the digital frame in an interesting way. This made for a nice conversation piece when people came over. But life changed. My new wife lamented the use of space for a display of “weird pictures,” so pictures of us started appearing. Thank you, Apple, for continuing to allow old iOS versions to receive picture updates. This calmed her purging urges.

Then, with the arrival of my daughter, the pictures became pictures of our family. Instead of being a lamented use of space, the E01 became a favorite piece displaying pictures of her growth. And here, the unique aspect ratio of the E01 helped present some of that library of pictures in a different and interesting way. And as she grew up, my daughter came to recognize pictures of herself at a much younger age.

Now, with the death of the Electric Objects service, our family’s digital picture frame is gone.

Goodbye, old friend, my E01. It was great while it lasted. Thank you, Jack Levine, along with the whole Electric Objects team, for bringing the E01 to the world. Thank you to the anonymous people who kept the infrastructure running. And thank you, Apple, for continuing to give iOS 12 photo updates.